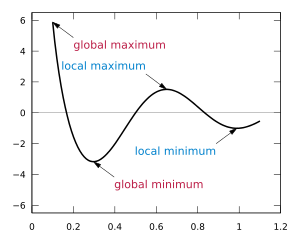

class: center, middle # Optimization .footnote[E. Cabrol, Nov. 2021] --- ## Introduction - Optimization relies on mathematical techniques (hopefully more efficient than intuition) used to minimize or maximize "something". - This "something" is usually called a *cost function* (hence the historical presentation of optimization problems as attempts to find the minimum - rather than the maximum) or *objective function* Example : *minimize the number of times I will open the fridge when preparing dinner* [*] .footnote[[*] Sorry, being french I can't help talking about food] --- ## Mathematical formulation The simplest expression of an optimization problem is $$ min \quad f(x) $$ where $ f $ is the objective function, and $ x $ is a variable. Anyone still reading this document probably knows how to find the minimum of $ x^2-6x $, but if I ask you to minimize $$ x^4+20 x^3-800 x $$ mental calculation won't help anymore. --- ## Choosing the right approach A lot of different algorithms, techniques and softwares exist to solve optimization problems, but if you use the wrong tool for the task, you will end up with nothing. In order to choose the right approach, you must be able to answer a lot of different questions. It is hard to make a hierarchy between these questions, since the resulting classification of this huge[*] family leads to sometimes overlapping categories, but ... let's give it a try ! .footnote[[*] see for example the wikipedia paragraph [major subfields](https://en.wikipedia.org/wiki/Mathematical_optimization#Major_subfields)] --- ## How many variables ? Is $ f $ a function of only one variable $ x $, or is it what we call a multivariate (or multivariable) optimization ? $$ min \quad f(x) $$ $$ or $$ $$ min \quad f(x_1,x_2, ...) $$ --- ## Mathematical programming Is there an analytical expression of the objective function $ f $ ? If so, you are probably able to compute the gradient(s) of the function, which helps finding minima (at least local ones). Due to AI expansion in the past decade, every deep-learning newbie has heard about *gradient descent* ... <img src="./Images/gradient_descent2.png" height="190" width="280"> .center[.small[image borrowed from [lucidar.me](https://lucidar.me/fr/neural-networks/single-layer-gradient-descent/)]] --- ## Single or multi-objective Is the objective function unique ? If not, we talk about multi-objective optimization. Sometimes the problem can be transformed into a single objective one, for example by attributing weights to each individual function. But it is not always possible, neither realistic, in which case solutions are expressed as a set of so-called Pareto optima, which represent trade-offs between conflicting objectives. Keywords : MOO, Pareto, multi-attribute optimization --- ## About constraints Are there any constraints on the variables ? Equality constraints ? $$ g_i(x_1,x_2, ...) = c_i \quad i=1..n $$ And/or inequality constraints ? $$ h_j(x) \geq d_j \quad j=1..m $$ This makes the difference between constrained and unconstrained optimization. Some keywords to look at for constrained optimization : Lagrange multipliers, penalty method ... --- ## About linearity Are both the objective and the constraints linear functions of the variables ? Objective and functions are linear => linear programming. Techniques exist to solve very large problems, with a huge number of variables. See simplex algorithm, column generation, interior point methods, Karmarkar ... Obective quadratic and no constraints : see Newton, quasi-Newton, BFGS Objective quadratic and constraints linear => quadratic programming Objective quadratic and constraints non-linear : can be handled by sequential quadratic programming (SQP) --- ## About discreteness Are the variables continuous, or discrete ? Can $ x $ take any value in the design domain, or is it restricted to a specific set of values ? Are variables required to be integers ? If so, and if the problem is linear, then we deal with *integer linear programming* (ILP) problems. If only some of the variables are integers, we have a *mixed integer linear programming* (MILP) problem. Both ILP and MILP are computationally complex (you probably have heard of NP-hard problems ...) --- ## About convexity If the objective function[*] and the feasible region are convex, it guarantees that a local minimum is also a global minimum. If this is not the case, gradient-based methods can get stuck in local minima and fail to find global ones. Some keywords to look at : simulated annealing, genetic algorithms  .footnote[[*] We assume here that we want to minimize it. If this a maximization problem, the objective function must of course be concave for the problem to be convex] --- ## Resources - [Decision tree for optimization software](http://plato.asu.edu/guide.html) by Hans Mittelman. This resource existed 15 years ago and is still alive. - [Linear Programming (LP) in 2 minutes](https://www.youtube.com/watch?v=C0TTxV0n9OA) and the whole channel **Visually explained** - [Gekko](https://www.apmonitor.com/wiki/index.php/Main/GekkoPythonOptimization), a Python package for "constrained, unconstrained, continuous, and discrete problems".